Test stability analysis

Since tests are used to determine the quality of your project, it is important for the tests themselves to be stable.

Unstable behavior of a test may interfere with the test results, making the whole report less trustworthy and giving the QA team “alarm fatigue.” It may also have a measurable negative impact on the team's productivity, since time spent chasing non-existent bugs is time not spent on real ones.

Ideally, each test should be consistent: it should yield a different status only when the system under test behaves differently. When a test fails, you should be able to reflect this in your team's priorities immediately instead of hoping that the test will pass again next time.

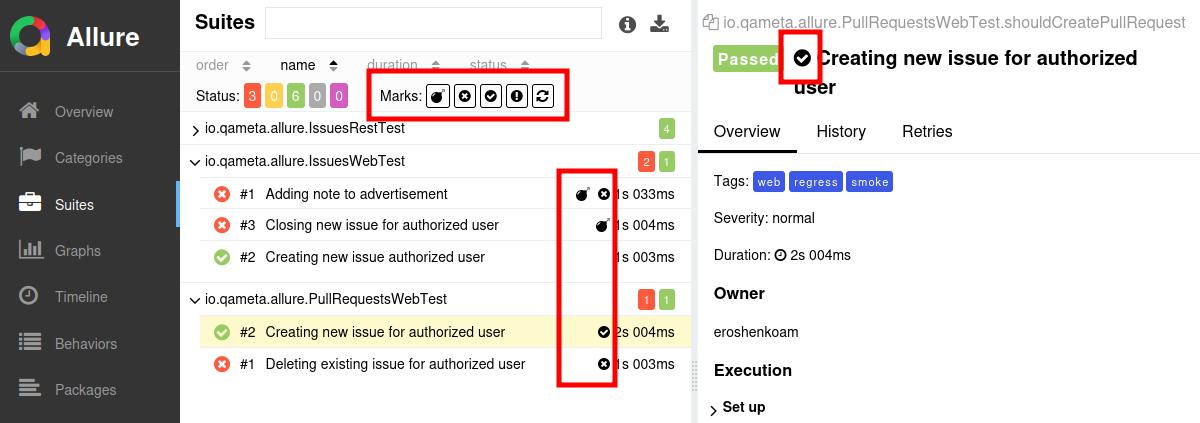

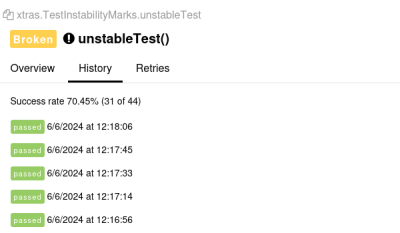

Allure Report helps you find potentially unstable tests by assigning test instability marks to them. There are five types of instability marks: Flaky, New failed, New passed, New broken, and Retried.

The marks are displayed next to the tests in the test trees and in the details panel. You can also filter tests by their marks.

Flaky tests

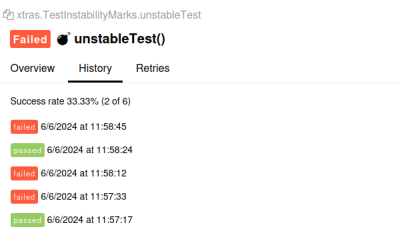

The Flaky mark is automatically added to a test if all the following conditions are met:

- the test got the Failed status at some point within the latest 5 launches,

- the test got the Passed status at least one time since then,

- the test got the Failed or Broken status in the latest launch.
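The three conditions can be read as a small check over a test's status history. The sketch below illustrates the rule as described above; it is not Allure's own implementation. It assumes the history is a list of lowercase status strings ordered oldest to newest, that the last entry is the latest launch, and that the five-launch window includes the latest launch. The name `is_flaky` is hypothetical.

```python
from typing import Sequence

# Statuses that count as a problem in the latest launch (condition 3).
FAILURE_STATUSES = {"failed", "broken"}


def is_flaky(history: Sequence[str], window: int = 5) -> bool:
    """Illustrative check of the Flaky conditions.

    `history` holds one status per launch, oldest first; the last element
    is the latest launch. Only the last `window` launches are considered.
    """
    recent = list(history)[-window:]
    # Condition 3: the latest launch must be Failed or Broken.
    if not recent or recent[-1] not in FAILURE_STATUSES:
        return False
    seen_failed = False
    for status in recent:
        if status == "failed":
            # Condition 1: a Failed status within the window.
            seen_failed = True
        elif status == "passed" and seen_failed:
            # Condition 2: a Passed status at some point after that failure.
            return True
    return False


print(is_flaky(["passed", "failed", "passed", "broken"]))  # True
print(is_flaky(["failed", "failed", "failed"]))            # False: never passed since
```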

Test history must be enabled for Allure Report to add this mark. Some Allure adapters also provide a way to mark a problematic test as Flaky manually; see the documentation for your Allure adapter.

To deal with flaky tests in your project, we recommend a four-step process: investigate everything, fix what can be fixed, retry the rest, and keep monitoring.

Investigate why the tests are unstable.

A flaky test may indicate a wide range of problems with the test code, the test environment, or the product itself. Various tools in Allure Report can help you analyze the test results.

On the Behaviors or Suites tab, click Show Flaky test results to see which features or suites contain more flaky tests than the others. For example, if the majority of flaky tests are related to a recently introduced feature in your application, you may need to investigate this feature more closely.

On the Categories tab, click Show Flaky test results to see whether the flaky tests share similar error messages and stack traces. For example, if you see the same timeout error across different flaky tests, it may be caused by an external server being unavailable rather than by test-specific issues.

For individual tests that are not part of larger trends, consider using steps and attachments to narrow down the moment when something goes wrong during the execution.

If possible, fix the source of instability.

Once the source of instability is detected, we recommend investing your resources in fixing it. This is important even for less critical bugs, as the inability to reliably run the test can prevent you from finding a more critical problem in the future.

If the instability is caused by the test code or the environment, fixing it may involve rearranging the test, rewriting some assertions, replacing the external services the test depends on with mock servers or other test doubles, etc. When different tests share a lot of code, fixing the underlying issue may improve multiple tests at once. Implementing all the changes may take some time, but it can also save a lot of time in the future, as the team won't have to analyze this flaky test result again.

Sometimes, the source of instability is the product itself. For example, flaky tests may appear because of memory leaks or data races. If you can't fix the problem now, try to reproduce it reliably. You may need to write a new test for that. This way, even when all the flaky tests happen to look “green” in a future report, you will see at least one “red” test result to inform you that the issue is still present.

If the test is still flaky, enable retries.

If you can't fix the source of flakiness right now, consider enabling an auto-retry mechanism. For example, you can configure it to rerun a failed test up to 5 times, which increases the flaky test's chances to pass and saves time on future test analysis. This works well for transient problems: if your test tries to connect to an external server and gets a timeout error, the cause is likely temporary and may be resolved by the time the test is retried a few seconds later.

Many test frameworks provide easy-to-use annotations or similar constructs for retrying failed tests automatically.

When you retry the whole test, its status is determined by the latest attempt, with the other attempts available on the Retries tab. Alternatively, implement retry logic just for the unstable part of your test, logging each attempt as a step with its own status. This way, the retries only cover known sources of instability and won't hide ones that are still unknown.
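A minimal sketch of the second approach, retrying only the unstable operation instead of the whole test. The names `retry_unstable_part` and `connect_to_service` are hypothetical, and a plain list stands in for the step log so the example runs on its own. With an Allure adapter such as allure-pytest, you could instead wrap each attempt in `allure.step(...)` so that every attempt appears in the report as a step with its own status.

```python
import time
from typing import Callable, List, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_unstable_part(
    action: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    attempts: int = 5,
    delay_seconds: float = 1.0,
    log: Optional[List[str]] = None,
) -> T:
    """Retry only `action`, and only for the known exception types in `retry_on`.

    Any other exception propagates immediately, so unknown sources of
    instability still fail the test instead of being hidden by a retry.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            result = action()
        except retry_on as exc:
            # Record the failed attempt; in an Allure-instrumented test this
            # is where a step could be reported as broken.
            if log is not None:
                log.append(f"attempt {attempt}: broken ({exc!r})")
            if attempt == attempts:
                raise  # out of attempts: let the test fail with the last error
            time.sleep(delay_seconds)
        else:
            if log is not None:
                log.append(f"attempt {attempt}: passed")
            return result
    raise AssertionError("unreachable")


calls = {"count": 0}


def connect_to_service() -> str:
    # Hypothetical stand-in for a call that times out twice, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("service did not respond")
    return "connected"


attempt_log: List[str] = []
print(retry_unstable_part(connect_to_service, delay_seconds=0, log=attempt_log))  # connected
print(attempt_log)
```

Catching only the known exception types is the key design choice here: an unexpected error, such as a wrong value returned by the product, still fails the test on the first attempt.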

Keep monitoring your flaky tests.

In each test report, make sure to analyze all flaky tests, even the ones you analyzed in previous reports. For example, a test that previously failed early because of an unavailable service may now fail for a completely different reason, and that new reason needs to be analyzed. Consider defining custom categories to distinguish between known and unknown causes.
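Custom categories in Allure Report are defined in a `categories.json` file placed in the test results directory before the report is generated. A minimal sketch that writes such a file from Python, separating one known cause from everything else. The directory name `allure-results`, the category names, and the message pattern are assumptions for illustration; adjust them to your adapter's output directory and your own known issues.

```python
import json
from pathlib import Path

# Hypothetical categories: one for a known cause, one catch-all for the rest.
CATEGORIES = [
    {
        "name": "Known issue: payment service timeout",
        "matchedStatuses": ["failed", "broken"],
        "messageRegex": ".*payment-service.*timed out.*",
    },
    {
        "name": "Unknown failures",
        "matchedStatuses": ["failed", "broken"],
    },
]


def write_categories(results_dir: str = "allure-results") -> Path:
    """Write categories.json into the results directory and return its path."""
    target_dir = Path(results_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    path = target_dir / "categories.json"
    path.write_text(json.dumps(CATEGORIES, indent=2), encoding="utf-8")
    return path
```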

New failed tests

The New failed mark is automatically added to a Failed test if it had any other status in the previous launch.

Test history must be enabled for Allure Report to add this mark.

When confronted with a New failed test, start by reviewing recent changes in the product codebase, the test codebase, their dependencies, or the external resources they rely on. Go to the Categories tab to see whether the new issue is unique to this test or common across different tests.

If the issue seems to be caused by a change, treat it as a defect and try to fix it if possible.

If the issue is not related to any recent changes, the test is likely on its way to being recognized as Flaky. A good way to start the investigation is to run the test again (for example, on your local computer). If it does not fail consistently, follow the recommendations for Flaky tests.
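When rerunning a test locally, it helps to run it several times in a row and count the outcomes instead of trusting a single run. A minimal sketch, assuming the test can be called as a plain Python function; `tally_outcomes` and `sample_test` are hypothetical, and in practice you would more likely rerun the test through your test runner. The sketch counts an `AssertionError` as "failed" and any other exception as "broken", roughly mirroring how Allure distinguishes the two statuses.

```python
from collections import Counter
from typing import Callable, Dict


def tally_outcomes(test: Callable[[], None], runs: int = 20) -> Dict[str, int]:
    """Run `test` repeatedly and count passed/failed/broken outcomes."""
    outcomes: Counter = Counter()
    for _ in range(runs):
        try:
            test()
        except AssertionError:
            outcomes["failed"] += 1
        except Exception:
            outcomes["broken"] += 1
        else:
            outcomes["passed"] += 1
    return dict(outcomes)


counter = {"calls": 0}


def sample_test() -> None:
    # Hypothetical test that fails on every third run.
    counter["calls"] += 1
    assert counter["calls"] % 3 != 0, "intermittent failure"


print(tally_outcomes(sample_test, runs=9))  # {'passed': 6, 'failed': 3}
```

A mix of outcomes, as in this example, points to instability; a test that fails on every run is more likely reproducing a real defect.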

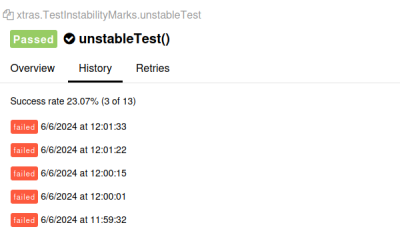

New passed tests

The New passed mark is automatically added to a Passed test if it had any other status in the previous launch.

Test history must be enabled for Allure Report to add this mark.

When they appear unexpectedly, New passed tests should usually raise the same concerns as New failed tests.

Review any recent changes in the product, the test, or the environment to see if they could have caused the status change. If they did, that's good news; if not, this may mean the test is Flaky and that other passed tests might be less trustworthy, too.

We also recommend rerunning the tests (for example, on your local computer) to see whether they pass consistently or return to their previous status. In the latter case, see the recommendations for Flaky tests.

New broken tests

The New broken mark is automatically added to a Broken test if it was not broken in the previous launch.

Test history must be enabled for Allure Report to add this mark.
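The New failed, New passed, and New broken marks follow the same pattern: each compares the test's current status only with its status in the previous launch. A minimal sketch of that comparison, assuming lowercase status strings and assuming that a test with no previous result gets no mark; the name `new_status_mark` is hypothetical and this is not Allure's own code.

```python
from typing import Optional

# Current statuses that can receive a "New ..." mark, per the rules above.
NEW_MARKS = {
    "failed": "New failed",
    "passed": "New passed",
    "broken": "New broken",
}


def new_status_mark(previous: Optional[str], current: str) -> Optional[str]:
    """Return the "New ..." mark for `current`, or None if no mark applies."""
    if previous is None:
        # Assumption: without history there is nothing to compare against.
        return None
    if current in NEW_MARKS and previous != current:
        return NEW_MARKS[current]
    return None


print(new_status_mark("passed", "failed"))  # New failed
print(new_status_mark("broken", "passed"))  # New passed
print(new_status_mark("failed", "failed"))  # None
```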

Just like a New failed test, a New broken test can indicate a new bug or an instability. Check whether recent changes caused the issue. If that's not the case, see the recommendations for Flaky tests.

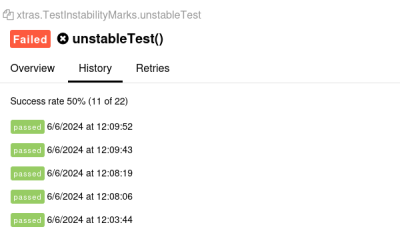

Retried tests

The Retried mark is automatically added to a test if it was run multiple times during the test launch and got at least two different statuses across these runs.

Unlike the other marks, the Retried mark does not require access to the history of earlier launches. Allure Report only considers the test's results from the current launch. See Retries for more details.
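A minimal sketch of the Retried rule together with how the final status is chosen (the latest attempt wins). It assumes the attempts of one test within the current launch are given as a list of lowercase statuses in execution order; `summarize_attempts` and `AttemptSummary` are hypothetical names.

```python
from typing import List, NamedTuple


class AttemptSummary(NamedTuple):
    final_status: str  # the status of the latest attempt
    retried: bool      # True if the attempts produced at least two statuses


def summarize_attempts(attempts: List[str]) -> AttemptSummary:
    """Summarize all runs of one test within a single launch."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    return AttemptSummary(
        final_status=attempts[-1],
        retried=len(attempts) > 1 and len(set(attempts)) > 1,
    )


print(summarize_attempts(["broken", "broken", "passed"]))
# AttemptSummary(final_status='passed', retried=True)
print(summarize_attempts(["failed", "failed"]))
# AttemptSummary(final_status='failed', retried=False)
```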

Depending on the test framework configuration and the nature of the tests themselves, the Retried mark may or may not indicate a problem. For example, if a test depends on an external web service, the Passed status with the Retried mark may be completely acceptable. On the other hand, a test that only exercises the product's own functionality is expected to pass every time, so a Retried mark on it likely needs further investigation.

Make sure to review these tests regularly. Just like a Flaky test, a Retried test's instability may have multiple sources, some of which may not have been analyzed yet.